- 2021-12-1


A research paradigm is an all-encompassing system of interrelated practice and thinking. The methods of evaluating change and improvement strategies are not well described. This publication describes two of the basic choices that must be made when designing an evaluation. In the evaluation process, the evaluation team can build on the strength of each type of data collection and minimize the weaknesses of any single approach. Evaluation research that relies on scientific methods is a young discipline that has grown massively in recent years (Spiel, 2001). The main point of difference between evaluation and research is their purpose.

Weiss [15] argues that evaluation is a rational enterprise that takes place in a political context. Initial design decisions reflect assumptions about the social world, how science should be conducted, and what constitutes legitimate problems, solutions, and criteria of "proof." Different approaches to research encompass both theory and method. The design phase involves several decisions and assessments, including selecting a design and proposed data collection strategies. Evaluation research should be a rigorous, systematic process that involves collecting data about organizations, processes, programs, services, and/or resources that enhance knowledge and decision making and lead to practical applications. Some designs are often referred to as patched-up research designs (Poister, 1978), and usually they do not test all the causal linkages in a logic model. Qualitative inquiries use in-depth interviews, focus groups, observational methods, document analysis, and case studies to provide rich descriptions of people, programs, and community processes. Research refers to the systematic process of group assignment, selection, and data collection techniques.
Evaluation research can be defined as a type of study that uses standard social research methods for evaluative purposes. One type of research design is purely theoretical: the researcher collects data, analyses and prepares it, and then presents it in an understandable manner. Ethically, communicating results opens an experiment or design to accurate, unbiased evaluation. The choice of evaluation design requires a careful analysis of the nature of the program, the purpose and context of the evaluation, and the environment within which it operates. Within the research method step, the kind of research, the approach to research, the design, participants/subjects, measuring instruments, the procedure, ethical aspects and manner of data analysis can be distinguished. An evaluation design or approach may be experimental, quasi-experimental, or non-experimental.
The design and conduct of a range of experimental and non-experimental quantitative designs are considered. Weiss refers to evaluation research as a rational enterprise. The researcher needs to determine the resources necessary to conduct the study, including when considering which questions are researchable. Mathison (2007) contrasts the two with "bumper sticker" slogans: evaluation particularizes, research generalizes, and research asks "what's so?" In the methodology section, the proposed research methodology is described in detail. For example, a survey of participants might be administered after they complete a workshop.

Evaluation is making judgments about value and effectiveness. Study designs may be experimental, quasi-experimental, or non-experimental. Given the diversity of evaluation questions of interest to stakeholders, there is no single "best" evaluation design that will work in all situations. The type of evaluation design you choose will depend on the questions you are asking. Evaluation research is defined as a form of disciplined and systematic inquiry that is carried out to arrive at an assessment or appraisal of an object, program, practice, activity, or system with the purpose of providing information that will be of use in decision making. Evaluation research is a type of applied research, and so it is intended to have some real-world effect. An evaluation can use several research designs and test different parts of the program logic with each one. Research Methods is a text by Dr. Christopher L. Heffner that focuses on the basics of research design and the critical analysis of professional research in the social sciences, from developing a theory, selecting subjects, and testing subjects to performing statistical analysis and writing the research report.
The Regression Discontinuity (RD) Design of Thistlethwaite and Campbell (1960) is used in applied microeconomic research. We organize our discussion of these various research designs by how they secure internal validity: in this view, the RD design can be seen as a close "cousin" of the randomized experiment. This understanding can lead to greater trust by partners and fewer conflicts or misunderstandings down the road. The challenge is to design a survey that accomplishes its purpose and avoids common errors.

The main connection between evaluation and research is that both aim to answer some questions in a systematic way. The evaluator determines the evidence that will be collected for each chosen criterion. Communicating a design also might help other engineers solve problems they are having with their own designs or inspire them with a new design. It also helps protect the intellectual rights of the scientists or engineers sharing the design. Design refers to the overall structure of the evaluation. Regardless of your level of expertise, as you plan your evaluation it may be useful to involve another evaluator with advanced training in evaluation and research design and methods. Evaluation often adopts social research methods to gather and analyze useful information about organizational processes and products. The most appropriate evaluation design for a program depends on the program's service model, the evaluation questions the program wishes to address, and the program's resources for conducting an evaluation.
Evaluations answer specific questions about program performance and may focus on assessing program operations or results. You state a problem or goal and then develop a disciplined plan to investigate one or more questions. According to Arifin, evaluation research aims to provide decision makers (policy makers) with information about the strength of a program in terms of its effectiveness, cost, tools, and so on. In the context of an impact/outcome evaluation, study design is the approach used to systematically investigate the effects of an intervention or a program. Appropriate research methods and metrics capture data, document results, and allow analysis and reporting. Your objective should be to maximize the reliability and the validity of your evaluation results. To design an outcome evaluation, begin with a review of the outcome components of your logic model (i.e., the right side). The research design refers to the overall strategy that you choose to integrate the different components of the study in a coherent and logical way, thereby ensuring you will effectively address the research problem; it constitutes the blueprint for the collection, measurement, and analysis of data. Research can be experimental, quasi-experimental, or non-experimental. Evaluation results can be used to maintain or improve program quality. "Comprising more than 500 entries, the Encyclopedia of Research Design explains how to make decisions about research design, undertake research projects in an ethical manner, interpret and draw valid inferences from data, and evaluate experiment design strategies and results." The best design for proving a cause-and-effect relationship between the intervention and outcomes is the randomized pretest-posttest design with a control group: R O1 X O2 for the treatment group and R O1 O2 for the control group.
The design of any study begins with the selection of a topic and a research methodology.
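
As a rough illustration of the randomized pretest-posttest design with a control group mentioned above (in the usual notation, R is random assignment, O1 the pretest, X the intervention, and O2 the posttest), the sketch below compares the average pre-to-post gain in the two groups. The function name and the scores are hypothetical, and a real evaluation would also need a significance test and attention to attrition; this only shows the basic arithmetic.

```python
from statistics import mean


def gain_score_effect(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Difference in mean gain (posttest minus pretest) between the groups.

    Each argument is a list of scores; pre and post lists are paired by
    participant. All names here are illustrative, not from any source.
    """
    treat_gain = mean(post - pre for pre, post in zip(treat_pre, treat_post))
    ctrl_gain = mean(post - pre for pre, post in zip(ctrl_pre, ctrl_post))
    return treat_gain - ctrl_gain


# Hypothetical scores: R O1 X O2 (treatment) and R O1 O2 (control).
effect = gain_score_effect(
    treat_pre=[10, 12, 14], treat_post=[15, 17, 19],
    ctrl_pre=[10, 12, 14], ctrl_post=[11, 13, 15],
)
print(effect)
```

Subtracting the control group's gain removes change that would have happened without the intervention, which is why this design supports stronger causal claims than a one-shot posttest.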

Also known as program evaluation, evaluation research is a common research design that entails carrying out a structured assessment of the value of resources committed to a project or specific goal. In a one-shot design, the evaluator gathers data following an intervention or program. The evaluation design matrix is an essential tool for planning and organizing an evaluation. Study design (also referred to as research design) refers to the different study types used in research and evaluation. Many methods, such as surveys and experiments, can be used to do evaluation research. Two choices must be made. The first is about the choice of an evaluation's design. The second concerns choosing a quantitative, qualitative, or combined approach; the aim is to select the approach most suitable for answering the questions at hand, rather than reflexively calling for both (Patton, 2002; Schorr & Farrow, 2011). A multimethod approach to evaluation can increase both the validity and the reliability of evaluation data. The methodology that is involved in evaluation research is managerial and provides management assessments, impact studies, cost benefit information, or critical path analysis. Evaluation is designed to improve something, while research is designed to prove something. Ian Atkinson specialises in employment and labour market research and has a particular interest in the design and implementation of employment programme evaluations. In descriptive research design, the scholar explains or describes the situation or case in depth in their research materials.
Before turning to evaluation research, let us first briefly consider the question of why evaluation is important and then identify the desirable characteristics of evaluation, the steps involved in planning an evaluation study, and the general approaches to evaluation. In this sense, the case study is not either a data collection tactic or merely a design feature alone (Stoecker, 1991) but a comprehensive research strategy. Evaluation and research both contribute to our learning and enhance our knowledge. However, the method is often misused and abused.

Evaluation is a systematic process to understand what a program does and how well the program does it. Evaluation of design artefacts and design theories is a key activity in Design Science Research (DSR), as it provides feedback for further development and (if done correctly) assures the rigour of the research. Process evaluation, as an emerging area of evaluation research, is generally associated with qualitative research methods, though one might argue that a quantitative approach can also yield important insights. The manual offers a way of thinking about project design and evaluation, rather than just a set of instructions and forms to do it. There are many possible research designs and plans. The design of an evaluation has to:
• describe the evaluand and derive the work tasks and challenges,
• give advice for formulating objectives and tasks of the evaluation (including how evaluation criteria are developed),
• stipulate the manner in which the evaluation is to be conducted (including data collection, analyses, assessment, interpretation).

Compared with research, evaluation has a judgmental quality, takes place in an action setting, and makes role conflicts more likely. Ian Atkinson is an Associate Director at Ecorys. Recently, the Foundation adopted a common strategic framework to be used across all its program areas: Outcome-focused Grantmaking (OFG). Monitoring and evaluation is the framework's ninth element. According to TerreBlanche and Durrheim (1999), the research process has three major dimensions: ontology, epistemology and methodology. Depending on the area of study, authority or reference consulted, assessment and evaluation may be treated as synonyms or as distinctly different concepts. The following Evaluation Purpose Statement describes the focus and anticipated outcomes of the evaluation: The purpose of this evaluation is to demonstrate the effectiveness of this online course in preparing adult learners for success in the 21st Century online classroom. An impact evaluation may be commissioned to inform decisions about making changes to a programme or policy (i.e., formative evaluation) or whether to continue, terminate, replicate or scale up a programme or policy (i.e., summative evaluation).
In education, assessment is widely recognized as an ongoing process aimed at understanding and improving student learning. Note that the type of instrument generated depends on the evaluation options selected. Surveys can be an effective means to collect data needed for research and evaluation (Keith G. Diem, Ph.D., Program Leader in Educational Design). Evaluation designs are the framework of the evaluation plan. Its broader purpose is one of development: to enhance the ability of people and institutions in developing countries to identify key challenges and generate effective responses to them. Weiss, however, highlights the political constraints and resistances that exist. Partners can become familiar with the principles of ethics, confidentiality, accountability, competency, relevancy, objectivity, and independence. The evaluator alone (or the evaluator with the client) chooses criteria to use for the evaluation based on the guidelines in Step 1e. Depending on the research questions to be answered, the best approach may be quantitative, qualitative, or some combination of the two. Evaluation research aims to provide the researcher with assessments of past, present or proposed programs of action. "Evaluation: Systematic investigation of the value, importance, or significance of something or someone along defined dimensions" (Yarbrough, Shulha, Hopson, & Caruthers, 2011, p. 287).
Note that if the evaluation will include more than one impact study design (e.g., a student-level RCT testing the impact of one component of the intervention and a QED comparing intervention and comparison schools), it's helpful to repeat sections 3.1 through 3.7 below for each design. A program evaluation is a systematic study using research methods to collect and analyze data to assess how well a program is working and why (GAO-12-208G). Evaluation research, including data analysis and reporting, is a rigorous, systematic process that involves collecting data about organizations. An evaluation design matrix is a table with one row for each evaluation question and columns that address evaluation design issues, such as data collection methods, data sources, analysis methods, and criteria for comparisons.
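
The evaluation design matrix described above (one row per evaluation question, with columns for data collection methods, data sources, analysis methods, and criteria for comparisons) can be sketched as a small data structure. This is only one possible layout: the class name, field names, helper function, and example questions are all assumptions made for illustration.

```python
from dataclasses import dataclass, field, fields


@dataclass
class MatrixRow:
    """One row of the matrix: an evaluation question and its design cells."""
    question: str
    data_collection_methods: list = field(default_factory=list)
    data_sources: list = field(default_factory=list)
    analysis_methods: list = field(default_factory=list)
    comparison_criteria: str = ""


def missing_cells(row):
    """Return the names of the columns that are still empty for this row."""
    return [f.name for f in fields(row) if not getattr(row, f.name)]


# Hypothetical matrix with one completed row and one row still to plan.
matrix = [
    MatrixRow(
        question="Did participants' knowledge improve after the workshop?",
        data_collection_methods=["post-workshop survey"],
        data_sources=["workshop participants"],
        analysis_methods=["descriptive statistics"],
        comparison_criteria="pre-workshop baseline",
    ),
    MatrixRow(question="Was the program delivered as planned?"),
]

for row in matrix:
    print(row.question, "->", missing_cells(row) or "complete")
```

Listing the empty cells per question is a simple way to see which parts of the evaluation plan still need decisions.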

The evaluation design matrix is an essential tool for planning and organizing an evaluation. The Evaluation Plan Template identifies the key components of an evaluation plan and provides guidance about the information typically included in each section of a plan for evaluating both the effectiveness and implementation of an intervention. Outcome evaluation measures program effects in the target population by assessing the progress in the outcomes that the program is to address. Developing an evaluation plan is a lot like designing a research strategy. Finally, issues with respect to reporting developmental research are discussed.


Evaluation is a program-oriented assessment. Though the definition is not completely settled in the literature, this Technical Note treats evaluations that combine methods in either sense as mixed-method evaluations. In Section 3, "Why is evaluation important to project design and implementation?", nine benefits of evaluation are listed, including, for example, the value of using evaluation results for public relations and outreach. The key to a good evaluation plan is the design of the study or studies to answer the evaluation questions. Such study designs should usually be used in a context where they build on appropriate theoretical, qualitative and modelling work, particularly in the development of appropriate interventions.
Research vs. evaluation: in evaluation, knowledge is intended for use, and the questions are program- or funder-derived. Before beginning your paper, you need to decide how you plan to design the study. How can we describe, measure and evaluate impacts?


evaluation research design pdf

- 2018-1-4


- 2018 Shimotsuke ayu new product information

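Happy New Year. We look forward to your continued support this year.

Information on Shimotsuke's new ayu products for 2018 has come in, so here is a quick early look (^O^)/

The products introduced here are shown only in their current form, so please understand that there may be slight changes by the time they go on sale <(_ _)>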

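First up are the ayu tabi (wading boots).

This is the Major Blood type. The gold-and-black combination looks great.

The sole on this one will probably be pin felt.

On the inside of the tabi, a separate soft fabric is sewn in alongside the neoprene. This fabric should make them easier to put on and take off.

This is the Neo Blood type. It combines silver and black.

The sole on this one is felt.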

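Next are the ayu tights.

This is the Major Blood type. It combines black and gold.

The gold parts are apparently planned to be a little brighter at release.

The changes this time are around the knee and behind the knee.

The areas that get rubbed most in ayu fishing have been further reinforced with pads and neoprene. Also, the ankle zipper has moved to the inside, which makes it easy to open and close while crouching slightly.

This is the Neo Blood type.

The ankle zipper is on the inside here too.

The knee area looks tough on this one too.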

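Next is the Light Cool Shirt.

The design has been changed. It looks like it will go nicely with the ayu vest (^▽^)

This year's model, the SMS-435, will apparently stay in next year's catalog too, so it is nice to be able to choose among three shirts according to your own taste.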

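Last is the ayu vest.

The design has been changed here too. The glimpse of orange makes a nice accent. The zippers are a type that opens and closes easily with one hand, so while holding a rod in the river you can take out rigs and hooks smoothly without extra stress, which I think is convenient.

This was brief, but I wanted to share the information we have so far. As I said at the start, these photos show prototypes at this point, so please understand that there may be some changes at release. (^o^)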


- 2017-12-12


- First snow, first boat, first area trout

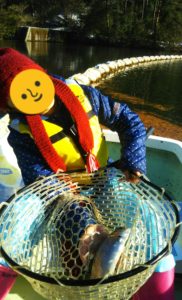

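The temperature has dropped sharply and it has turned cold. This is the season when the water should be just right for trout at managed fishing areas.

So I went. To 通天湖 (Tsutenko), a managed fishing area in southern Kyoto Prefecture where you can fish for trout from a boat.

They always do a big stocking around this time of year, so I checked the website: stocking on Friday, and my day off is Saturday!

I want to go! But Saturdays always depend on the kids. For a start, I asked my elder daughter about her plans.

"I want to go fishing."

Whether or not she knew what her dad was hoping for, it was the best possible answer! Thank you, thank you, Animal Crossing (どうぶつの森).

So off we went to Tsutenko. There was snow on the road from the previous day's snowfall, and the fishing area was a snowy landscape too.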

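We started just before noon. I began by teaching her how to cast, then searched widely with a heavy spoon, but the trout would not bite.

To keep her from getting bored, we moved around, I let her row the boat, and we checked the bottom in the shallows, and then headed to a spot where I had done well after a stocking before.

This was exactly the right call. A rainbow took the feather jig on the first cast, and another hit the crankbait on the second.

Another came on a 1.6 g spoon, so they seemed to be suspended in the middle layer.

My daughter got excited and cast too, but kept snagging trees and the like and could not hook up.

But once I devoted myself to playing host and taught her to reel and pause, she soon got a hit!

After that she hooked and lost fish several times over, and we had plenty of fun before our time was up.

In the end my daughter caught fish too, I was happy with my own catch, and it was a good day's fishing.

"Glad you caught some. I'll come along with you again."

So she said in the car on the way home, and I was honored by the praise.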