- 2021-12-1


If "split", the result contains the number of times the feature is used in a model. If "gain", the result contains the total gains of the splits that use the feature.

Feature importance is a model inspection technique that shows the relationship between a feature and the target, and it is useful for non-linear and opaque estimators. For more details, check out the docs/source/notebooks folder. A global measure refers to a single ranking of all features for the model, whereas local feature importance calculates the importance of each feature for each data point. Local feature importance becomes relevant in cases such as loan applications, where each data point is an individual person and fairness and equity must be ensured.

Feature importance can be computed with Shapley values (you need the shap package). The summary plot combines feature importance with feature effects.

SHAP summary plot (image by author).

Calling Explanation.cohorts(N) will create N cohorts that optimally separate the SHAP values of the instances using a sklearn DecisionTreeRegressor. Explaining Multi-class Classifiers and Regressors: generate CF explanations for a multi-class classifier or regressor.

SHAP-based importance: `import shap; explainer = shap.TreeExplainer(rf); shap_values = explainer.shap_values(X_test); shap.summary_plot(shap_values, X_test, plot_type="bar")`. Once SHAP values are computed, other plots can be made. We can also use the auto-cohort feature of Explanation objects to create a set of cohorts using a decision tree. targets: if data is a numpy array or list, a numpy array or list of evaluation labels. Since we have the SHAP tool, we can get a clearer picture using the partial dependence plot.

Embedded feature selection combines the advantages of the filter and wrapper approaches by embedding feature selection into the model-building process. To summarize the whole feature selection workflow: embedded feature selection fits the data with certain special models and then relies on some of the model's own … for the features …

In this example, I will use the boston dataset available in the scikit-learn package (a regression … Xgboost is a gradient boosting library. A benefit of using ensembles of decision tree methods like gradient boosting is that they can automatically provide estimates of feature importance from a trained predictive model. In this post, I will show you how to get feature importance from an Xgboost model in Python.

Permutation feature importance shows the decrease in the score (accuracy, F1, R2) of a model when a single feature is randomly shuffled. On the left, feature importance is calculated by SHAP values. Maybe it can be enhanced, but for now let's go and try to explain how it behaves with SHAP. Use the slider to show descending feature importance values.

There are many types and sources of feature importance scores, although popular examples include statistical correlation scores, coefficients calculated as part of linear models, decision trees, and permutation importance … LinearExplainer: this explainer is used for linear models available from sklearn.

Feature importance shows how important a feature is for a particular model. That makes it possible to see the big picture when making decisions and to avoid black box models. We can say that the petal width feature from the dataset is the most influential feature. Note that they all contradict each other, which motivates the use of SHAP values since they come with consistency guarantees (meaning they will order the features correctly). Explore the top-k important features that impact your overall model predictions (also known as global explanation). It can account for the relationship between features as well. If we do this for the adult census data then we will see a clear separation between those with low vs. high capital gain.
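As a rough illustration of the permutation importance idea described above (the drop in score when a single feature is shuffled), here is a minimal sketch in plain Python. The toy model `toy_predict`, the synthetic data, and the helper names are assumptions made up for this example; they are not part of scikit-learn or any other library mentioned here.

```python
import random


def r2_score(y_true, y_pred):
    """Coefficient of determination, written out so the sketch needs no dependencies."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in R2 when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = r2_score(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = r2_score(y, [predict(row) for row in X_perm])
            drops.append(baseline - score)
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_predict(row):
    # Hypothetical "trained model": strong use of feature 0, weak use of
    # feature 1, and no use of feature 2.
    return 3.0 * row[0] + 0.5 * row[1]


data_rng = random.Random(42)
X = [[data_rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y = [toy_predict(row) for row in X]

importances = permutation_importance(toy_predict, X, y)
for j, value in enumerate(importances):
    print(f"feature {j}: mean R2 drop = {value:.4f}")
```

Because the toy model ignores the third feature, shuffling it leaves the score unchanged, while shuffling the heavily weighted first feature costs the most.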
In this post, I will present 3 ways (with code examples) to compute feature importance for the Random Forest algorithm from … Here we try out the global feature importance calculations that come with XGBoost. The first thing I learned as a data scientist is that feature selection is one of the most important steps of a machine learning pipeline. It can help with better understanding of the problem being solved and sometimes leads to model improvements through feature selection.

Partial Dependence Plots. SHAP Dependence Plot. Aggregate feature importance.

Otherwise, only column names present in feature_names are regarded as feature columns. categorical_features: accepts a list of indices (e.g. [1,4,5,6]) in the training data that represent categorical features. gpu_id (Optional): device ordinal. The feature importance type for the feature_importances_ property: for a tree model, it's either "gain", "weight", "cover", "total_gain" or "total_cover".

SHAP and LIME are both popular Python libraries for model explainability. Feature selection from the surrogate dataset: after obtaining the surrogate dataset, it weighs each row according to how close it is to the original sample/observation. Then it uses a feature selection technique like Lasso to obtain the top important features. The importance of a feature can be found by knowing the impact of the feature on the output or by knowing the distribution of the feature.

Understanding and applying SHAP: SHAP has two core concepts, shap values and shap interaction values. In the official examples there are mainly three applications, the force plot, the summary plot and the dependence plot, and all three are applied to shap values and shap interaction …

class shap.KernelExplainer(model, data, link=
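To make the link between Shapley values and local versus global importance concrete, here is a small sketch that computes exact Shapley values by enumerating feature subsets. It is not how the shap package is implemented; `toy_model`, the all-zero baseline, and the helper names are assumptions chosen for illustration, and the brute-force enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one data point (local importance).

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)

    def value(subset):
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def global_importance(predict, rows, baseline):
    """Mean absolute Shapley value per feature (a single global ranking)."""
    per_row = [shapley_values(predict, row, baseline) for row in rows]
    n = len(baseline)
    return [sum(abs(p[i]) for p in per_row) / len(per_row) for i in range(n)]


def toy_model(row):
    # Hypothetical model with one additive term and one interaction term.
    return 2.0 * row[0] + row[1] * row[2]


baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, [1.0, 2.0, 3.0], baseline)
print("local:", phi)

overall = global_importance(toy_model, [[1.0, 2.0, 3.0], [0.0, 1.0, 1.0]], baseline)
print("global:", overall)
```

The local values sum to the difference between the prediction for the point and the prediction for the baseline, and the interaction term is split evenly between the two features that take part in it. Averaging absolute local values over the rows gives the kind of global ranking that a bar-style summary plot displays.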


shap feature importance sklearn

- 2018-1-4


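- 2018 Shimotsuke ayu new product information

Happy New Year. Thank you in advance for your support this year.

Information on Shimotsuke's new ayu products for 2018 has come in, so here is a quick early look (^O^)/

Please note that the products introduced here are shown in their current form and may change slightly by the time they go on sale <(_ _)>

First up are the ayu tabi (wading boots).

This is the Major Blood type. The gold and black combination looks good.

The sole on this one will probably be pin felt.

On the inside of the tabi, a separate soft fabric is sewn in alongside the neoprene. This fabric should make them easier to put on and take off.

This is the Neo Blood type, in a silver and black combination.

The sole on this one is felt.

Next are the ayu tights.

This is the Major Blood type, in a black and gold combination.

The gold sections are apparently planned to be a little brighter at release.

The changes this time are around the knee and behind the knee.

The areas that often get rubbed in ayu fishing have been further reinforced with pads and neoprene. Also, the ankle zipper has moved to the inside, which makes it easy to open and close while crouching slightly.

This is the Neo Blood type.

The ankle zipper is on the inside on this one too.

The knee area looks sturdy on this one as well.

Next is the Light Cool Shirt.

The design has been changed. It looks like it will go well with the ayu vest (^▽^)

This year's model, the SMS-435, will apparently stay in next year's catalog, so it is nice to be able to choose among three shirts according to your own taste.

Last is the ayu vest.

The design has been changed here too. The glimpse of orange makes a nice accent. The zippers are a type that can be opened and closed easily with one hand, so being able to take out rigs and hooks smoothly, without extra stress, while holding a rod in the river should be convenient.

That is a quick first look at the information available so far. As mentioned at the start, these photos show prototypes at this point, so please note that there may be some changes at release. (^o^)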


- 2017-12-12


- First snow, first boat, first area trout

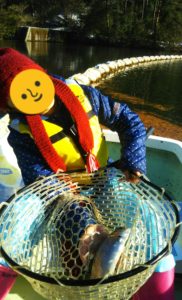

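The temperature has dropped sharply and it has gotten cold. This is the season when the water temperature should be just right for trout at managed fishing areas.

So I went to Tsutenko (通天湖), a managed fishing area in southern Kyoto Prefecture where you can catch trout from a boat.

At this time of year they always do a big stocking, so I checked the website: the stocking was on Friday, and my day off was Saturday!

I had to go! But Saturdays usually depend on the kids. For a start, I asked my older daughter about her plans.

"I want to go fishing."

Whether or not she knew what her dad was hoping for, it was the best possible answer! Thank you, thank you, Animal Crossing.

So off we went to Tsutenko. There was snow on the road from the day before, and the fishing area was a snowy landscape too.

We started before noon. I began by teaching her how to cast and searched widely with a heavier spoon, but the trout would not bite.

To keep my daughter from getting bored, we moved around, I let her row the boat, and we checked the bottom in the shallows, eventually heading to a spot where I had done well after a stocking before.

That was exactly right. A rainbow took a feather jig on the first cast, and another took a crankbait on the second.

I also caught one on a 1.6 g spoon, so they seemed to be suspended in the middle of the water column.

My daughter got excited and cast too, but she kept snagging on trees and could not hook up.

But when I committed to playing host and taught her to reel and pause, she hooked up quickly!

After that she hooked and lost fish several times, and we had plenty of fun before our time ran out.

In the end my daughter caught fish too, and I was satisfied with the catch; it was a good day's fishing.

"Good thing we caught some. I'll come along with you again."

Those were the words of praise I received in the car on the way home.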