- 2021-12-1


A great way to increase the transparency of a model is to use SHAP values. SHAP (SHapley Additive exPlanations) is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see the original papers for details and citations).

In a nutshell, SHAP values are used whenever you have a complex model (a gradient boosting model, a neural network, or anything else that takes features as input and produces predictions as output) and you want to understand what decisions the model is making. A SHAP value measures the impact of a feature having a certain value, compared with the prediction we would make if that feature took some baseline value. The feature contributions plus the bias term sum to the raw prediction of the model, i.e., the prediction before the inverse link function is applied.

Automated Predictive (APL) is one example of SHAP-explained models: it addresses classification and regression scenarios with the Gradient Boosting technique. In SHAP summary plots, the color gradient indicates the original value of the variable.

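
To make the additivity property concrete, here is a minimal Python sketch (not the shap library's implementation) that computes exact Shapley values by brute force over every coalition. It assumes that a feature missing from a coalition is replaced by a single baseline value; the function name `exact_shapley` and the toy model are hypothetical. Enumeration is exponential in the number of features, so this is only practical for a handful of them.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def exact_shapley(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one instance by enumerating every coalition.

    Assumption: a feature that is "absent" from a coalition is set to its
    baseline value (a single reference point). This is one convention;
    SHAP implementations usually average over a background dataset instead.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Features in the coalition keep the instance's value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    # Hypothetical toy model with an interaction between features 1 and 2.
    def f(z):
        return 3 * z[0] + 2 * z[1] * z[2]

    x, base = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phi = exact_shapley(f, x, base)
    print([round(p, 6) for p in phi])          # [3.0, 6.0, 6.0]
    # Additivity: base value plus contributions reproduces the prediction.
    print(round(f(base) + sum(phi), 6), f(x))  # 15.0 15.0
```

The interaction term \(2 x_1 x_2\) is split equally between the two features that form it, while the purely linear feature keeps its own effect.
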
The underlying computation behind SHAP feature contributions is powered by a solution taken from game theory: the Shapley value, which is characterized by a collection of desirable properties. A prediction can be explained by assuming that each feature value of the instance is a "player" in a game where the prediction is the payout. For example, suppose instance 1 and instance 2 receive actual predictions of 70K and 40K, respectively, while the average prediction is 50K; the goal of Shapley values is to explain the differences of +20K and -10K between each actual prediction and the average.

Note that with a linear model, the SHAP value for feature \(i\) for the prediction \(f(x)\) (assuming feature independence) is just \( \phi_i = \beta_i \cdot (x_i - E[x_i]) \). As SHAP values try to isolate the effect of each individual feature, they can be a better indicator of the similarity between examples.

For tree-based models, `TreeExplainer` is a fast and accurate algorithm. It allows fast exact computation of SHAP values without sampling and without providing a background dataset, since the background is inferred from the coverage of the trees.

By default, the dependence plot function draws feature values on the x-axis and SHAP values on the y-axis, optionally coloring the points by another feature. For a single prediction, the `shap_values` parameter accepts an array of SHAP values for an individual sample of data.

Further reading: "SHAP Values Explained Exactly How You Wished Someone Explained to You."

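
The linear-model formula \( \phi_i = \beta_i \cdot (x_i - E[x_i]) \) can be checked with a short sketch. It assumes feature independence and estimates \(E[x_i]\) from a small background sample; `linear_shap`, the coefficients, and the data are hypothetical. The last line confirms that the average prediction over the background plus the SHAP values reproduces \(f(x)\).

```python
from statistics import fmean
from typing import Sequence


def linear_shap(
    beta: Sequence[float],
    x: Sequence[float],
    background: Sequence[Sequence[float]],
) -> list[float]:
    """SHAP values of a linear model f(x) = b + sum_i beta_i * x_i.

    Assumes feature independence, so phi_i = beta_i * (x_i - E[x_i]); E[x_i]
    is estimated here by the column mean of a background sample.
    """
    means = [fmean(col) for col in zip(*background)]
    return [b * (xi - m) for b, xi, m in zip(beta, x, means)]


if __name__ == "__main__":
    intercept, beta = 1.0, [2.0, -0.5]                 # hypothetical coefficients
    background = [[0.0, 4.0], [2.0, 0.0], [1.0, 2.0]]  # hypothetical data
    x = [3.0, 1.0]

    def f(z):
        return intercept + sum(b * zi for b, zi in zip(beta, z))

    phi = linear_shap(beta, x, background)
    expected = fmean(f(row) for row in background)  # E[f(X)] over the background
    print(phi)                        # [4.0, 0.5]
    print(expected + sum(phi), f(x))  # 6.5 6.5
```
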
SHAP is a unified approach that works with any kind of machine learning or deep learning model: it explains the prediction of any model by computing the contribution of each feature to that prediction. The goal of SHAP is to explain the prediction for any instance \(x_i\) as a sum of feature contributions. Shapley values, a method from coalitional game theory, tell us how to fairly distribute the "payout" among the features. The worth \(v\) of a coalition such as ['RM', 'LSTAT'] is simply the prediction of the model when only the values of RM and LSTAT are known. In Kernel SHAP, the parameters of the LIME objective (the loss, the weighting kernel, and the complexity term) are set following the Shapley value formalism.

SHAP can also produce a global interpretation by computing the Shapley values for a whole dataset and combining them. In a dependence plot, points are not colored if `color_feature` is not supplied; in a summary plot, the x-axis shows the SHAP value. A simple example of applying shap is explaining a linear logistic regression sentiment analysis model.

With a binary classification model, one user found that `explainer.expected_value` returned a single value and `shap_values` returned a single matrix.

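
One common way to combine per-row SHAP values into a global interpretation is to rank features by their mean absolute SHAP value. The sketch below assumes the SHAP values are already available as a rows × features matrix; the matrix, the feature names (`temp`, `hum`, `windspeed`), and `global_importance` are hypothetical.

```python
from typing import Sequence


def global_importance(
    shap_matrix: Sequence[Sequence[float]], feature_names: Sequence[str]
) -> list[tuple[str, float]]:
    """Rank features by mean absolute SHAP value across all rows."""
    n_rows = len(shap_matrix)
    scores = [
        sum(abs(row[j]) for row in shap_matrix) / n_rows
        for j in range(len(feature_names))
    ]
    # Largest average magnitude first, as in a bar-style summary.
    return sorted(zip(feature_names, scores), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical per-row SHAP values for three features.
    shap_matrix = [
        [0.5, -2.0, 0.1],
        [-0.5, 1.0, 0.0],
        [1.0, -3.0, -0.2],
    ]
    for name, score in global_importance(shap_matrix, ["temp", "hum", "windspeed"]):
        print(f"{name}: {score:.3f}")
    # hum: 2.000
    # temp: 0.667
    # windspeed: 0.100
```

Taking absolute values matters: a feature that pushes some predictions up and others down would otherwise cancel out and look unimportant.
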
Mark Romanowsky, Data Scientist at DataRobot, explains SHAP values in machine learning using a relatable and simple example: ride-sharing with friends. Shapley values tell us how to fairly distribute the "payout" (= the prediction) among the features. SHAP starts from a base value for the prediction, the model's expected output, and then introduces the features one by one to understand the impact of each on the base value, arriving at the final prediction. A waterfall plot displays this build-up for a single prediction: SHAP values show how much a given feature changed our prediction compared with making that prediction at some baseline value of that feature.

In a dependence plot, you can optionally use a different variable for the SHAP values on the y-axis and color the points by the feature value of a designated variable.

For classification, one might expect `explainer.expected_value` to return an array of size two and `shap_values` to return two matrices, one for the positive class and one for the negative class. Whenever you explain a multi-class output model with KernelSHAP, you will get a list of `shap_values` arrays as the explanation, one for each of the outputs.

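
The base-value-plus-contributions build-up can be sketched as a running total, which is essentially what a waterfall plot draws. The numbers, feature names, and `waterfall_steps` helper are hypothetical; adding the largest effects first is a presentation choice, since the final total is the same in any order.

```python
from typing import Sequence


def waterfall_steps(
    base_value: float,
    contributions: Sequence[float],
    names: Sequence[str],
) -> list[tuple[str, float, float]]:
    """Build a prediction step by step from the base value.

    Returns (feature, contribution, running total) tuples. Features are added
    largest |contribution| first; the ordering does not change the final total.
    """
    order = sorted(range(len(names)), key=lambda i: abs(contributions[i]), reverse=True)
    running = base_value
    steps = []
    for i in order:
        running += contributions[i]
        steps.append((names[i], contributions[i], running))
    return steps


if __name__ == "__main__":
    # Hypothetical base value and SHAP contributions (in thousands).
    base_value = 50.0
    contributions = [12.0, -5.0, 13.0]
    names = ["feature_a", "feature_b", "feature_c"]
    for name, contrib, total in waterfall_steps(base_value, contributions, names):
        print(f"{name:>10} {contrib:+6.1f} -> {total:6.1f}")
    # The last running total is the prediction: 50 + 13 + 12 - 5 = 70.
```
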
The SHAP library can make a machine learning model more explainable by visualizing its output. SHAP applies game theory to local explanations to create consistent and locally accurate additive feature attributions. Shapley values, and the SHAP library, are powerful tools for uncovering the patterns a machine learning algorithm has identified.

As an example, consider a team of three members, A, B, and C. Applying the formula (the weighting term of the Shapley sum is 1/3 for the coalitions {} and {A,B}, and 1/6 for {A} and {B}), we get a Shapley value of 21.66% for team member C. Team member B naturally has the same value, while repeating this procedure for A gives 46.66%. A crucial characteristic of Shapley values is that the players' contributions always add up to the final payoff.

The setup in our case is as follows: \(N=4\) features are now allowed to form coalitions. The `feature_names` parameter accepts a list of feature names.

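
The coalition weights quoted in the team example come from the Shapley weighting term \(|S|!\,(n-|S|-1)!/n!\). This sketch, with a hypothetical helper `shapley_weight`, evaluates it exactly with `fractions.Fraction` for \(n = 3\) players.

```python
from fractions import Fraction
from math import comb, factorial


def shapley_weight(coalition_size: int, n_players: int) -> Fraction:
    """Weight |S|! (n - |S| - 1)! / n! given to a player's marginal
    contribution when joining coalition S (S excludes that player)."""
    return Fraction(
        factorial(coalition_size) * factorial(n_players - coalition_size - 1),
        factorial(n_players),
    )


if __name__ == "__main__":
    n = 3  # team members A, B, C
    for size in range(n):
        print(size, shapley_weight(size, n))
    # 0 1/3   -> empty coalition {}
    # 1 1/6   -> {A} or {B} when scoring C
    # 2 1/3   -> {A, B} when scoring C
    # Weights over all coalitions that exclude a given player sum to 1.
    print(sum(comb(n - 1, k) * shapley_weight(k, n) for k in range(n)))  # 1
```
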
SHAP (SHapley Additive exPlanations), proposed by Lundberg and Lee (2016), is a unified approach to explaining the output of any machine learning model by measuring the features' importance to the predictions; it is probably the state of the art in machine learning explainability. Kernel SHAP approximates feature contributions as Shapley values, whereas the original LIME approach defines locality for the instance being explained heuristically. Shapley values can be negative: a negative value means the feature pushed the prediction below the base value. Note that H2O implements TreeSHAP, which, when features are correlated, can increase the contribution of a feature that had no influence on the prediction. For two-part models, see mSHAP: SHAP Values for Two-Part Models.

Further reading: Samuele Mazzanti, "SHAP Values Explained Exactly How You Wished Someone Explained to You", Towards Data Science.

The following computes the SHAP values for a linear model and makes a standard partial dependence plot:

```python
import shap

# Assumes `model` is a fitted linear model, `X` is the feature DataFrame, and
# `X100` is a 100-row background sample, e.g. X100 = shap.utils.sample(X, 100).

# compute the SHAP values for the linear model
explainer = shap.Explainer(model.predict, X100)
shap_values = explainer(X)

# make a standard partial dependence plot for the "RM" feature
sample_ind = 18  # sample index from the original example
shap.partial_dependence_plot(
    "RM", model.predict, X100,
    model_expected_value=True, feature_expected_value=True, ice=False,
    # … (remaining arguments elided in the original)
)
```

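
Kernel SHAP fits a weighted linear regression over sampled coalitions, and the weight it gives to a coalition of size \(|z'|\) out of \(M\) features is the Shapley kernel from Lundberg and Lee, \((M-1)/\big(\binom{M}{|z'|}\,|z'|\,(M-|z'|)\big)\). The sketch below only computes that weight (`shapley_kernel_weight` is a hypothetical name); sampling coalitions and solving the regression are left out.

```python
import math


def shapley_kernel_weight(num_features: int, coalition_size: int) -> float:
    """Shapley kernel weight used in Kernel SHAP's weighted linear regression:
    (M - 1) / (C(M, |z|) * |z| * (M - |z|)).

    The empty and full coalitions get infinite weight; in practice they are
    enforced as constraints (or given a very large finite weight).
    """
    m, s = num_features, coalition_size
    if s == 0 or s == m:
        return math.inf
    return (m - 1) / (math.comb(m, s) * s * (m - s))


if __name__ == "__main__":
    m = 4
    for s in range(m + 1):
        print(s, shapley_kernel_weight(m, s))
    # 0 inf
    # 1 0.25
    # 2 0.125
    # 3 0.25
    # 4 inf
```

The weights are symmetric and largest for very small and very large coalitions, which is where a single feature's effect is easiest to isolate. This is the key difference from LIME's heuristic locality kernel.
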
SHAP and Shapley values are built on the foundation of game theory. The Shapley value is a solution concept in cooperative game theory. It was named in honor of Lloyd Shapley, who introduced it in 1951 and was awarded the Nobel Memorial Prize in Economic Sciences in 2012. Predictive models answer the "how much"; as for explaining what a predictive model does, APL relies on the SHAP framework.

In a summary plot, each instance of the given explanation is represented by a single dot on each feature row; in the example plot, each blue dot is a row (a day in this case). In a stacked force plot, each example is a vertical line, and the SHAP values for the entire dataset are ordered by similarity.

Two-part models are important to, and used throughout, insurance and actuarial science. Since insurance is required for registering a car, obtaining a mortgage, and participating in certain businesses, it is especially important that the models which price insurance policies are fair.

The SHAP algorithm was first published in 2017 by Lundberg and Lee. The Shapley value assigns to each cooperative game a unique distribution (among the players) of the total surplus generated by the coalition of all players; a player can be an individual feature value. A SHAP explanation shows the contribution of the features for a given instance, and SHAP values can also be used to cluster examples.

For example, consider an ultra-simple model:

\[y = 4 x_1 + 2 x_2\]

If \( x_1 \) takes the value 2, instead of a baseline value of 0, then our SHAP value for \( x_1 \) would be 8 (from 4 times 2).

Tree SHAP (see the arXiv paper) allows for the exact computation of SHAP values for tree ensemble methods and has been integrated directly into the C++ LightGBM code base. The function `xgb.plot.shap` from the xgboost package provides SHAP dependence plots, with the SHAP value on the y-axis. In a bar chart, the value next to each feature is its mean absolute SHAP value, and the `max_display` parameter accepts an integer specifying how many features to display.

Resources: Interpretable ML Book: https://christophm.github.io/interpretable-ml-book/; GitHub project: https://github.com/deepfindr/xai-series

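
The ultra-simple model \(y = 4 x_1 + 2 x_2\) can be checked in a few lines. For a linear model without interactions and a single baseline point, each SHAP value is \(w_i (x_i - b_i)\); the value \(x_2 = 3\) and the helper name `linear_shap_from_baseline` are hypothetical additions.

```python
from typing import Sequence


def linear_shap_from_baseline(
    weights: Sequence[float], x: Sequence[float], baseline: Sequence[float]
) -> list[float]:
    """SHAP values of a linear model with no interactions, relative to a
    single baseline point: phi_i = w_i * (x_i - baseline_i)."""
    return [w * (xi - bi) for w, xi, bi in zip(weights, x, baseline)]


def y(v: Sequence[float]) -> float:
    # The ultra-simple model y = 4 x_1 + 2 x_2.
    return 4 * v[0] + 2 * v[1]


if __name__ == "__main__":
    x = [2.0, 3.0]         # x_1 = 2 as in the text; x_2 = 3 is hypothetical
    baseline = [0.0, 0.0]
    phi = linear_shap_from_baseline([4.0, 2.0], x, baseline)
    print(phi)                             # [8.0, 6.0]
    print(y(x) - y(baseline), sum(phi))    # 14.0 14.0
```
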
SHAP is the acronym for SHapley Additive exPlanations, derived from the Shapley values introduced by Lloyd Shapley in 1951 as a solution concept for cooperative game theory. The SHAP explanation method computes Shapley values from coalitional game theory, and Shapley values guarantee that the prediction is fairly distributed across the different features (variables). SHAP connects game theory with local explanations, uniting several previous methods and representing the only possible consistent and locally accurate additive feature attribution method based on expectations. SHAP answers the "why".

The beeswarm plot is designed to display an information-dense summary of how the top features in a dataset impact the model's output. Each point represents a row from the original dataset, and the x position of each dot is determined by the SHAP value (`shap_values.values[instance, feature]`) of that feature. Looking at the temp variable, we can see that lower temperatures are associated with a big decrease in SHAP values. In the xgboost SHAP plots, the x-axis shows the original variable value.

When a multi-output model is explained, the `expected_value` attribute is also a vector, with one entry per output.

The SHAP value is a great tool among others such as LIME (see my post "Explain Your Model with LIME"), InterpretML (see my post "Explain Your Model with Microsoft's InterpretML"), or ELI5. It is also an important tool in Explainable AI, or Trusted AI, an emerging development in AI (see my post "An Explanation for eXplainable AI"). The mSHAP paper (Spencer Matthews et al., 06/16/2021) applies SHAP values to two-part models.

The feature values of a data instance act as players in a coalition. In the case of classification, `shap_values` will be a list of arrays whose length equals the number of classes, and the same holds for `expected_value`. So we should choose which label we are trying to explain and use the corresponding entries of `shap_values` and `expected_value` in further plots. The SHAP package renders the force plot as an interactive plot.


shap values explained

- 2018-1-4


- Comments Off on 2018 Shimotsuke Ayu New Product Information

Happy New Year! Thank you for your continued support this year.

I've received information on Shimotsuke's 2018 new ayu products, so I'm sharing a little of it ahead of everyone else (^O^)/

Please note that the products I'm about to introduce are shown in their current form, and there may be slight changes by the time they are released <(_ _)>

First up: the ayu tabi (wading shoes).

This is the Major Blood type. The gold-and-black combination looks great.

I think this one will probably come with a pin-felt sole.

On the inside of the tabi, a separate soft fabric is sewn in, not just neoprene. Thanks to this fabric, they should be smoother to put on and take off.

This is the Neo Blood type, in a silver-and-black combination.

This one has a felt sole.

Next are the ayu tights.

This is the Major Blood type, in a black-and-gold combination.

It seems the gold parts are planned to be a little brighter at release.

This time, the changes are around the knees and on the backs of the knees.

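These areas, which rub a lot in ayu fishing, have been further reinforced with pads and neoprene. Also, the ankle zipper has moved to the inside, making it easier to open and close while crouching slightly.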

This is the Neo Blood type.

This one also has the ankle zipper on the inside.

The knee area on this one looks tough, too.

Next is the Light Cool Shirt.

The design has been changed. It looks like it will go nicely with the ayu vest (^▽^)

This year's model, the SMS-435, also seems to be staying in next year's catalog, so it's nice that you can choose among three shirts to suit your taste.

Last is the ayu vest.

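The design of this one has changed too. The glimpse of orange makes a nice accent. It also uses zippers that can easily be opened and closed with one hand, so I think it will be convenient to take out your rigs and anchor hooks smoothly, without extra stress, while standing in the river holding your rod.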

That's a quick introduction to the information I have so far. As I mentioned at the start, these photos show current prototypes, so there may be some changes at release. (^o^)


- 2017-12-12


- Comments Off on First Snow, First Boat, First Area Trout

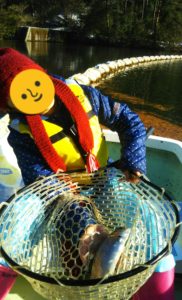

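The temperature has dropped sharply and it has gotten cold. This is the season when the water at managed fishing areas should be just the right temperature for trout.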

So I went to Tsutenko (通天湖), a managed fishing area in southern Kyoto Prefecture where you can catch trout from a boat.

They always do a big stocking at this time of year, so I checked their website: stocking on Friday, and my day off on Saturday!

I had to go! But my Saturdays always depend on the kids. So I asked my older daughter about her plans.

"I want to go fishing."

Whether or not she knew what her dad was hoping for, she gave the best possible answer! Thank you, thank you, Animal Crossing.

So off we went to Tsutenko. There was snow on the road from the previous day's snowfall, and the fishing area was a snowy landscape.

We started just before noon. I began by teaching her how to cast and searched widely with a heavier spoon, but the trout wouldn't bite.

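To keep my daughter from getting bored, we moved around, I let her row the boat, and we checked the bottom in the shallows, making our way to a spot where I had done well after a previous stocking.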

That was exactly the right call. A rainbow trout hit a feather jig on the very first cast, and another hit a crankbait on the second.

They also took a 1.6 g spoon; the fish seemed to be suspended in the mid-depths.

My daughter got excited and started casting too, but she kept snagging trees and couldn't hook anything.

But once I focused on playing host and taught her to reel and pause, she got a bite right away!

After that she hooked and lost fish several times, had plenty of fun, and then our time was up.

In the end, my daughter caught fish, I was satisfied with my own catch, and it was a good day of fishing.

"Good thing you caught some. I'll tag along with you again sometime."

That was the praise I received in the car on the way home.